The state of LLM Agentic Flows

Updated from an earlier post on LinkedIn

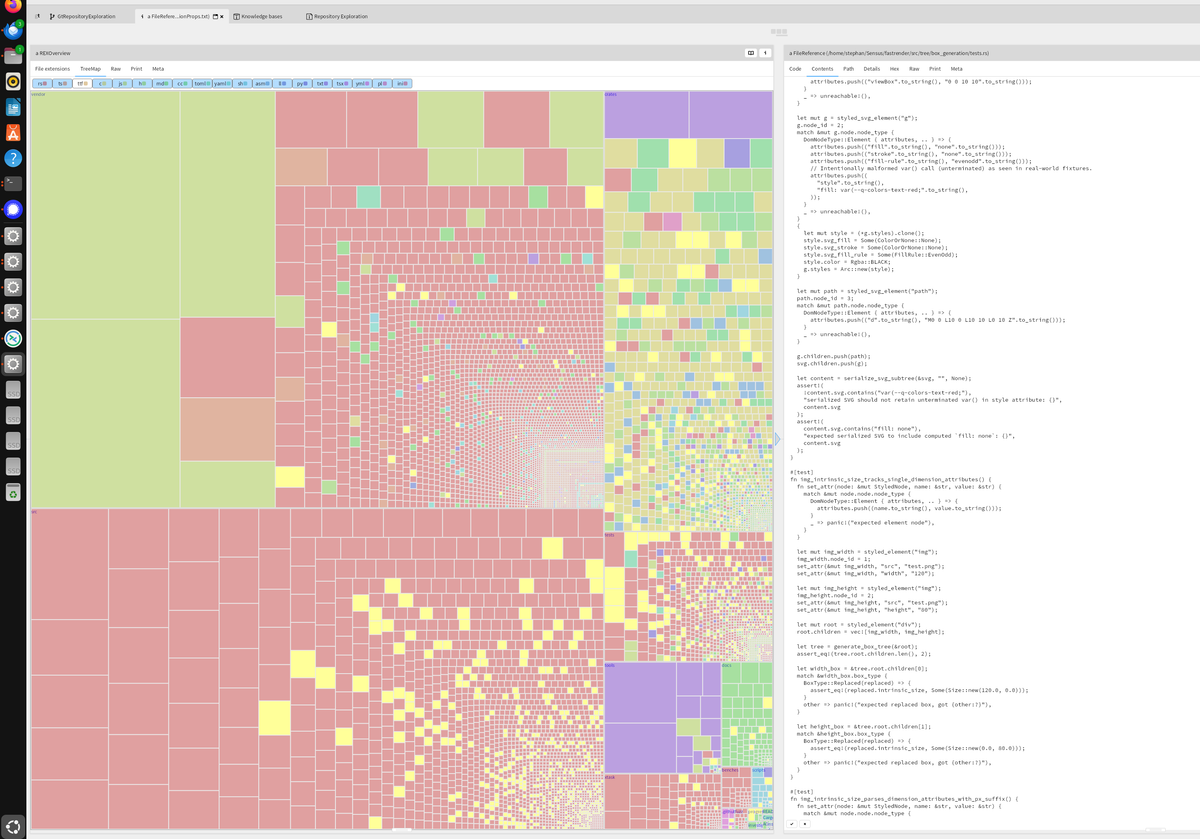

If this is the state of the art at Cursor, I have some questions. The largest LLM-generated Rust file in the repository is over 77,000 lines long. That is far too large, and it shows that having so many agents work on the codebase did not maintain architectural constraints. Since the company's valuation depends on being able to use many agents in parallel effectively, I wonder why they published a blog post about this failure and claimed it was a success.

The source code for the generated web browser.

An earlier blog post criticizing Cursor for implying success without evidence.

What we are building with LLMs is still far smaller, so I wanted to see what I could determine from applying the tooling we use to keep our software architecture sound.